Storing LOBs In and Out of a Row- Large Objects

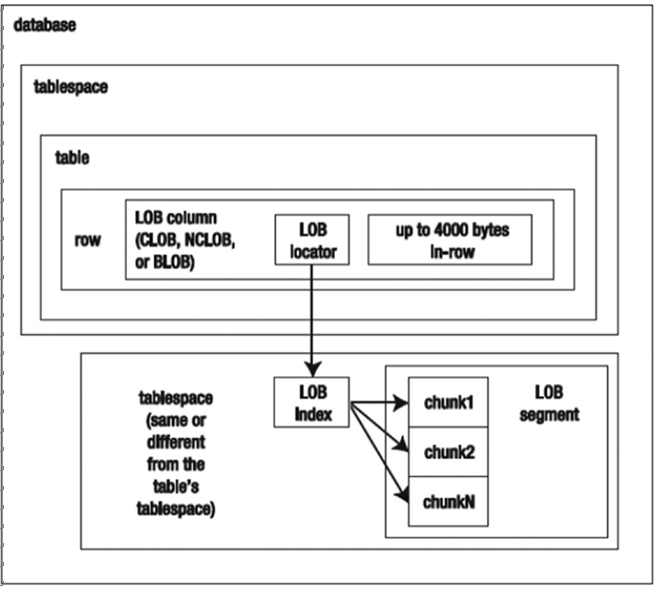

By default, up to 8,000 bytes of a LOB column are stored inline with the table row. Prior to 23c it was up to 4,000 bytes. If the LOB is more than 8,000 bytes, then Oracle automatically stores it outside the row data. The main advantage of storing a LOB in a row is that small LOBs require less I/O, because Oracle does not have to search out of the row for the LOB data.

However, storing LOB data in a row is not always desirable. The disadvantage of storing LOBs in a row is that the table row sizes are potentially longer.

This can affect the performance of full-table scans, range scans, and updates to columns other than the LOB column. In these situations, you may want to disable storage in the row.

For example,you explicitly instruct Oracle to store the LOB outside the row with the DISABLE STORAGE IN ROW clause:

SQL> create table patchmain( patch_id number, patch_desc clob , lob_file blob)

lob(patch_desc, lob_file) store as (tablespace lob_data disable storage in row);

ENABLE STORAGE IN ROW is enabled by default and will store up to 8,000 bytes of a LOB in the table row. The LOB locator is aways stored inline with the row even if you specify DISABLE STORAGE IN ROW.

You cannot modify the LOB storage in a row after the table has been created. The only ways to alter storage in a row are to move the LOB column or drop and re-create the table. This example alters the storage in a row by moving the LOB column:

SQL> alter table patchmain move lob(patch_desc)

store as (enable storage in row);

You can verify the in-row storage via the IN_ROW column of USER_LOBS:

SQL> select table_name, column_name, tablespace_name, in_row from user_lobs;

TABLE_NAME COLUMN_NAME TABLESPACE_NAME IN_ROW

PATCHMAIN PATCHMAIN LOG_FILE PATCH_DESC

LOB_DATA YES LOB_DATA YES

Viewing LOB Metadata

You can use any of the DBA/ALL/USER_LOBS views to display information about LOBs in your database:

SQL> select table_name, column_name, index_name, tablespace_name from all_lobs order by table_name;

Also keep in mind that a LOB segment has a corresponding index segment:

SQL> select segment_name, segment_type, tablespace_name from user_segments where segment_name like ‘SYS_LOB%’ or segment_name like ‘SYS_IL%’;

In this way, you can query both the segment and the index in the DBA/ALL/USER_ SEGMENTS views for LOB information.

You can use the DBA/ALL/USER_SEGMENTS to get the space consumed by the LOB segment. Here is a sample query:

SQL> select segment_name, segment_type, segment_subtype, bytes/1024/1024 meg_bytes from user_segments;

You can modify the query to report on only LOBs by joining to the DBA/ALL/USER_ LOBS view:

SQL> select a.table_name, a.column_name, a.segment_name, a.index_name ,b.bytes/1024/1024 meg_bytes

from user_lobs a, user_segments b where a.segment_name = b.segment_name;

You can also use the DBMS_SPACE.SPACE_USAGE package and procedure to report on the blocks being used by a LOB.